How to Log Kafka Data to SQL Server

Open Automation Software can be configured to connect to Apache Kafka using the Kafka connector and log the data to a SQL Server database. This guide walks you through downloading and installing OAS, configuring a Kafka data source connector, configuring tags and logging them to a SQL Server database.

For this guide on how to log Kafka data to SQL Server you will need:

- A SQL Server database and user account with permission to create tables, procedures, indexes and read and write data

- Docker installed in Linux or Windows including the docker-compose utility

Info

If you have your own Kafka cluster deployed you can skip the steps for creating a Kafka cluster.

1 - Download and Install OAS

If you have not already done so, you will need to download and install the OAS platform.

Fully functional trial versions of the software are available for Windows, Windows IoT Core, Linux, Raspberry Pi and Docker on our downloads page.

On Windows, run the downloaded setup.exe file to install the Open Automation Software platform. For a default installation, Agree to the End User License Agreement and then click the Next button on each of the installation steps until it has completed.

If you'd like to customize your installation or learn more, use the following instructions:

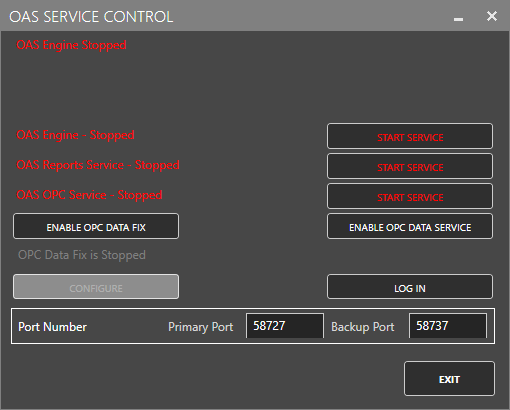

The OAS Service Control application will appear when the installation finishes on Windows.

Click on each START SERVICE button to start each of the three OAS services.

2 - Configure OAS

Configure OAS is the main application used to configure local and remote OAS instances.

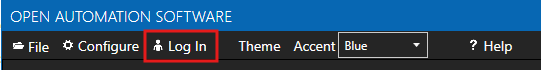

From your operating system start menu, open the Configure OAS application.

Select the Configure > Tags screen.

Important

If this is the first time you have installed OAS, the AdminCreate utility will run when you select a screen in the Configure menu. This will ask you to create a username and password for the admin user. This user will have full permissions in the OAS platform.

For further information see Getting Started - Security.

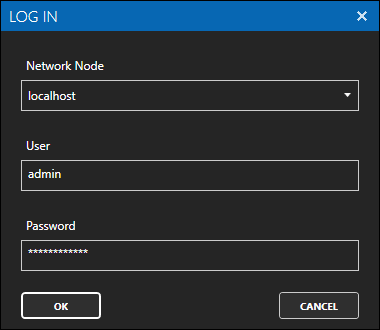

If this is the first time you are logging in, you will see the AdminCreate utility. Follow the prompts to set up your admin account. Otherwise, select the Log In menu button and provide the Network Node, username and password.

Info

In this guide you will use the Configure OAS application to configure the local Network Node which by default is localhost.

If you have installed OAS on a remote instance you can also connect to the remote instance by setting the relevant IP address or host name in the Network Node field.

3 - Create a Kafka test cluster using Docker

In this step you will create a local Kafka cluster. To simplify the deployment you will need to have Docker installed including the docker-compose utility. This method allows you to create a cluster very quickly for testing purposes and remove it again when you are done.

Create a new folder such as Kafka and inside the folder create a new

docker-compose.ymlfile with the following definition.services: zookeeper: image: confluentinc/cp-zookeeper:latest container_name: zookeeper ports: - "2181:2181" environment: ZOOKEEPER_CLIENT_PORT: 2181 ZOOKEEPER_TICK_TIME: 2000 kafka: image: confluentinc/cp-kafka:latest container_name: kafka ports: - "9092:9092" environment: KAFKA_BROKER_ID: 1 KAFKA_ZOOKEEPER_CONNECT: zookeeper:2181 KAFKA_ADVERTISED_LISTENERS: PLAINTEXT://localhost:9092 KAFKA_LISTENER_SECURITY_PROTOCOL_MAP: PLAINTEXT:PLAINTEXT KAFKA_OFFSETS_TOPIC_REPLICATION_FACTOR: 1Open a command line or bash terminal in the Kafka folder and use the following command to start a new container. This will download the image and all its dependencies and may take a few minutes.

docker-compose up -dCreate a new topic called temperature using the following command.

docker exec kafka kafka-topics --create --topic temperature --bootstrap-server localhost:9092 --partitions 1 --replication-factor 1

Info

Once you are done testing, you can stop and remove the containers that you created by using the following command:

docker-compose down

4 - Configure Kafka Consumer Connector

In the following steps you will create and configure a Kafka Connector connecting to a local Kafka cluster on port 9092. This connector will act as a Consumer where Tags can subscribe to a topic in the cluster.

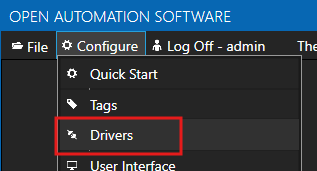

Select Configure > Drivers from the top menu.

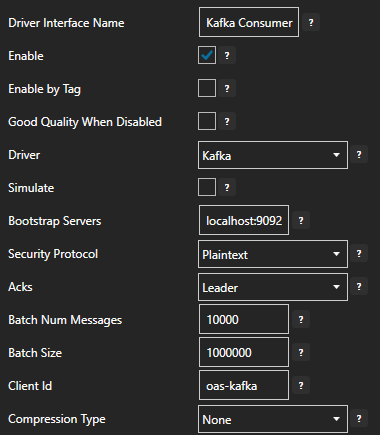

Set the Driver Interface Name to Kafka Consumer to give this driver interface instance a unique name.

Ensure the following parameters are configured:

- Driver: Kafka

- Bootstrap Servers: localhost:9092

- Security Protocol: Plaintext

- Client Id: oas-kafka

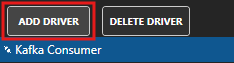

Click on the ADD DRIVER button on the left hand side to add this driver configuration. Once added, the driver interface name should appear in the list of drivers.

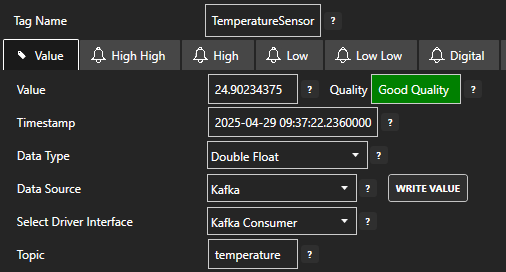

5 - Assign Kafka as Tag Data Source

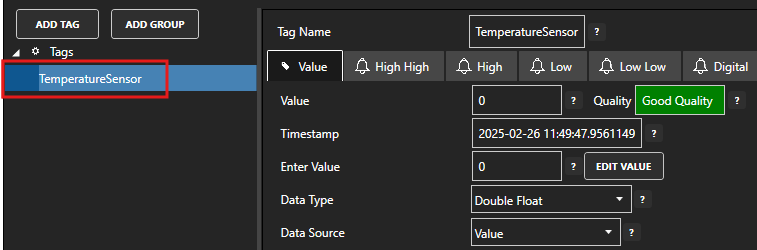

You will now set the Tag's data source to the Kafka driver interface that you created previously.

Select the Tag that will source data from a Kafka data source.

Set the Data Source to Kafka.

Set the Select Driver Interface drop-down to the Kafka Consumer interface created previously.

Set the Topic to temperature. This is the topic that you created previously when setting up the local Kafka cluster.

Click on the Apply Changes button to apply the changes.

To publish a data value from your Kafka cluster, you can use the following command.

docker exec -i kafka kafka-console-producer --topic temperature --bootstrap-server localhost:9092You can now type in any data such as a numeric value and it will be published to the temperature topic.

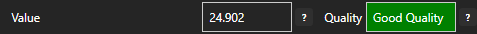

Check that the quality status is Good Quality and the data in the Value field is as expected.

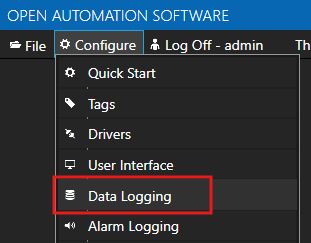

6 - Configure Data Logging

You will now configure data logging to a SQL Server database.

Select Configure > Data Logging from the top menu.

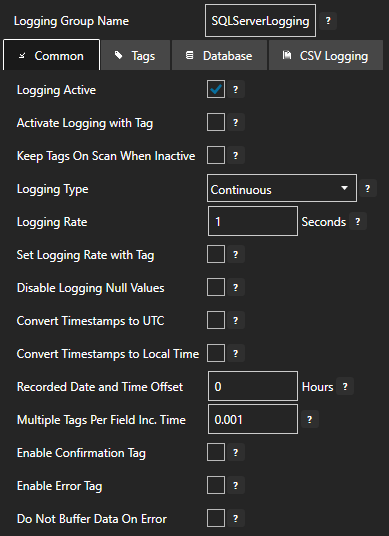

Enter a meaningful Logging Group Name to give this data logging group a unique name. A data logging group is simply a data logging configuration for a set of Tags.

On the Common tab leave all the default values. This will create a configuration that uses Continuous logging with a 1 second Logging Rate. This simply means, log data at a rate of 1 second.

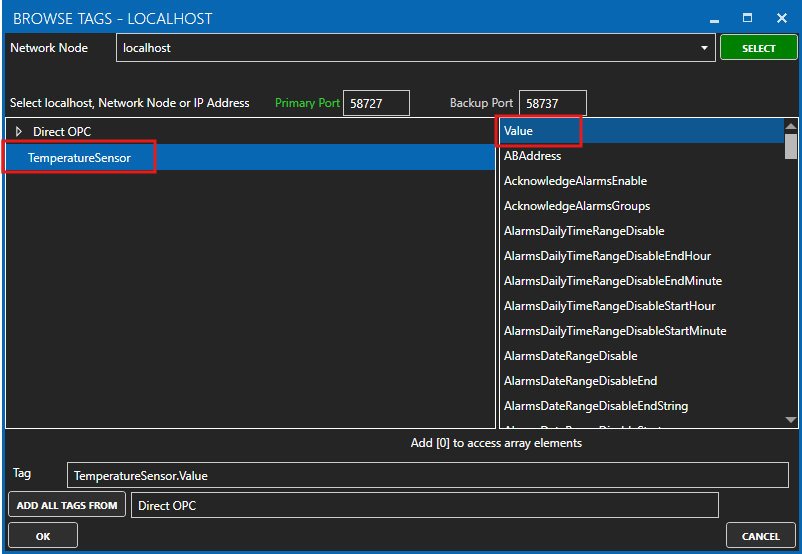

On the Tags tab you will add all of the Tags that you want to log. Each Tag will be a field (column) in the database. Click on the ADD button to bring up the Tag Browser.

Select the Tag you want to add in the left hand panel and then ensure the Value property is selected and then click on the OK button.

Tips

Each Tag has dozens of properties that are available for you to access. The Value property is the most commonly used property as it presents the Tag's current value. This is what we want to log to the database.

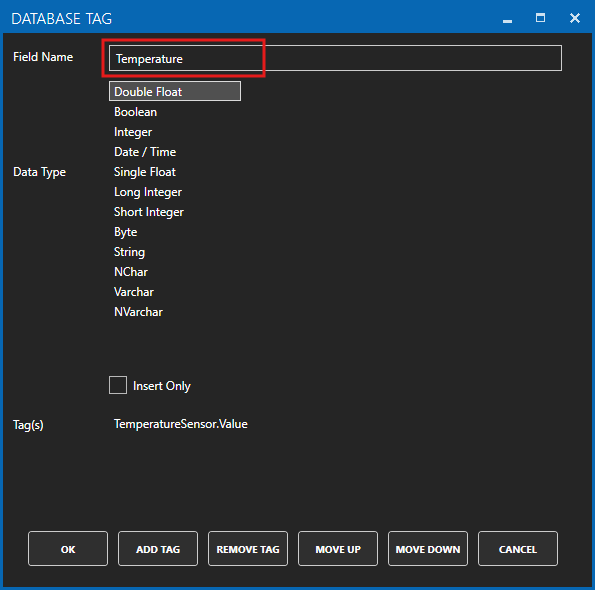

The Database Tag window will appear. This is where you set the database field name (column name) and the database data type that will be used. The most common types will be Double Float, Boolean, Integer and String.

Let's set the field name to Temperature. Now click on the OK button.

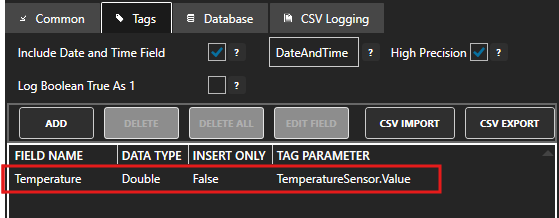

The Tag will appear in the list of Tags to be logged.

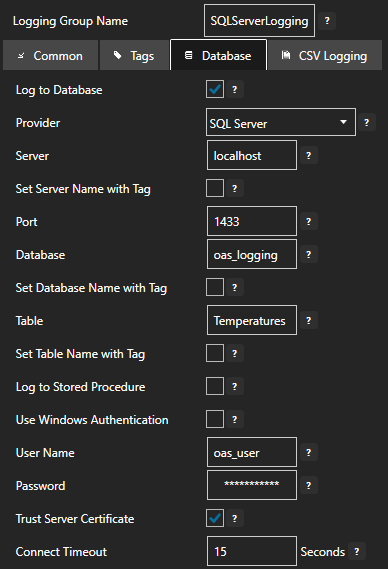

On the Database tab you will configure the database type and connection parameters:

- Tick the Log to Database checkbox to enable logging to a database

- Set Provider to SQL Server

- Set Server to your SQL Server instance (e.g., localhost or servername\instancename)

- Set Database to oas_logging

- Set Table to a table name such as Temperatures

- Set User Name to a user with permissions to manage the oas_logging database

- Set Password to the user password

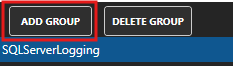

Click on the ADD GROUP button to add the data logging group. Once added, the data logging group name should appear in the list of logging groups.

Your database logging group is now active.

7 - Save Changes

Once you have successfully configured your OAS instances, make sure you save your configuration.

On each configuration page, click on the Save button.

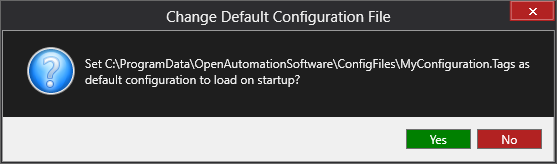

If this is the first time you are saving the configuration, or if you are changing the name of the configuration file, OAS will ask you if you want to change the default configuration file.

If you select Yes then OAS will make this configuration file the default and if the OAS service is restarted then this file will be loaded on start-up.

If you select No then OAS will still save your configuration file, but it will not be the default file that is loaded on start-up.

Important

Each configuration screen has an independent configuration file except for the Tags and Drivers configurations, which share the same configuration file. It is still important to click on the Save button whenever you make any changes.

For more information see: Save and Load Configuration

Info

- On Windows the configuration files are stored in C:\ProgramData\OpenAutomationSoftware\ConfigFiles.

- On Linux the configuration files are stored in the ConfigFiles subfolder of the OAS installation path.